“Hey, is this you?”

Bunni gets these DMs often — random alerts from strangers flagging phony profiles mimicking her online. As an OnlyFans creator, she’s learned to live with the exhausting, infuriating cycle of impersonation that comes with the territory. Five years in, she knows the drill.

But this time felt different. The account in question hit too close. The photo? No doubt, it’s her shirt, her tattoos, her room. Everything checks out, but that is not her face.

A reverse deepfake

What’s happening to Bunni is one of the more unusual — and unsettling — evolutions of deepfake abuse. Deepfakes, typically AI-generated or AI-manipulated media, are most commonly associated with non-consensual porn involving celebrities, where a person’s face is convincingly grafted onto someone else’s body. This form of image-based sexual exploitation is designed to humiliate and exploit, and it spreads quickly across porn sites and social platforms. One of the most prominent hubs for this kind of content, Mr. Deepfake, recently shut down after a key service provider terminated support, cutting off access to its infrastructure.

The shutdown happened a week after Congress passed the “Take It Down Act,” a bill requiring platforms to remove deepfake and revenge porn content within 48 hours of a takedown request. The legislation, expected to be signed into law by President Donald Trump, is part of a broader push to regulate AI-generated abuse.

But Bunni’s case complicates the conversation. This isn’t a matter of her face being pasted into explicit content — she’s already an OnlyFans creator. Instead, her photos were digitally altered to erase her identity, repackaged under a different name, and used to build an entirely new persona.

Chasing an AI catfisher — Bunni’s situation

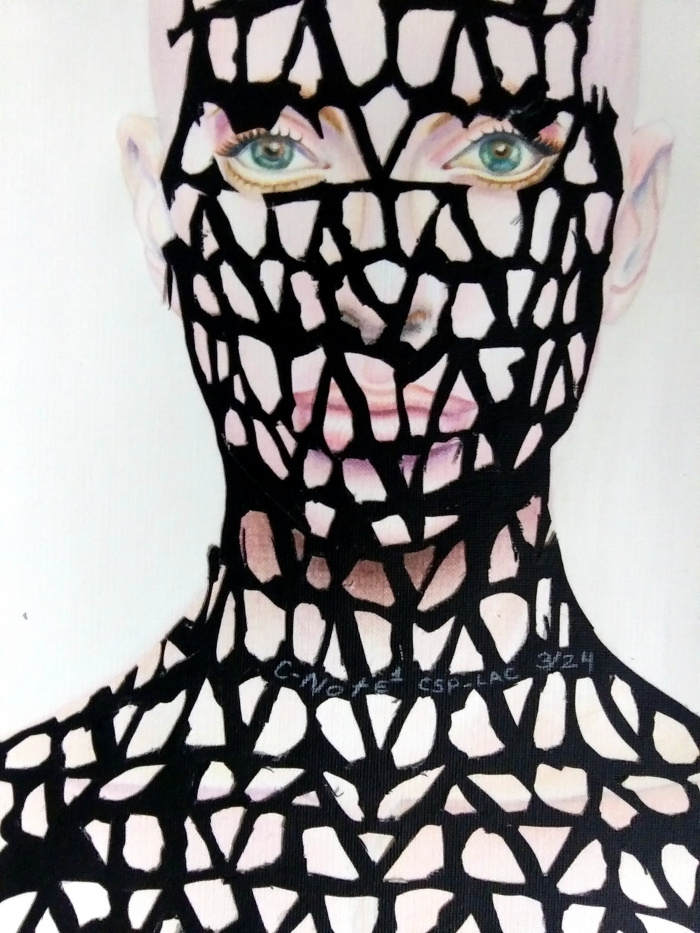

In February, Bunni posted a video to Instagram. The video showed a surreal side-by-side: the real Bunni pointing at a picture from a Reddit post that barely resembled her. The fake image had been meticulously scrubbed of many of her defining features — the facial piercings gone, her dark hair lightened, her expression softened. In their place was a face engineered for anonymity: big green eyes, smooth skin, and sanitized alt-girl aesthetics.

Credit: Screenshot from Instagram user @bunnii_squared

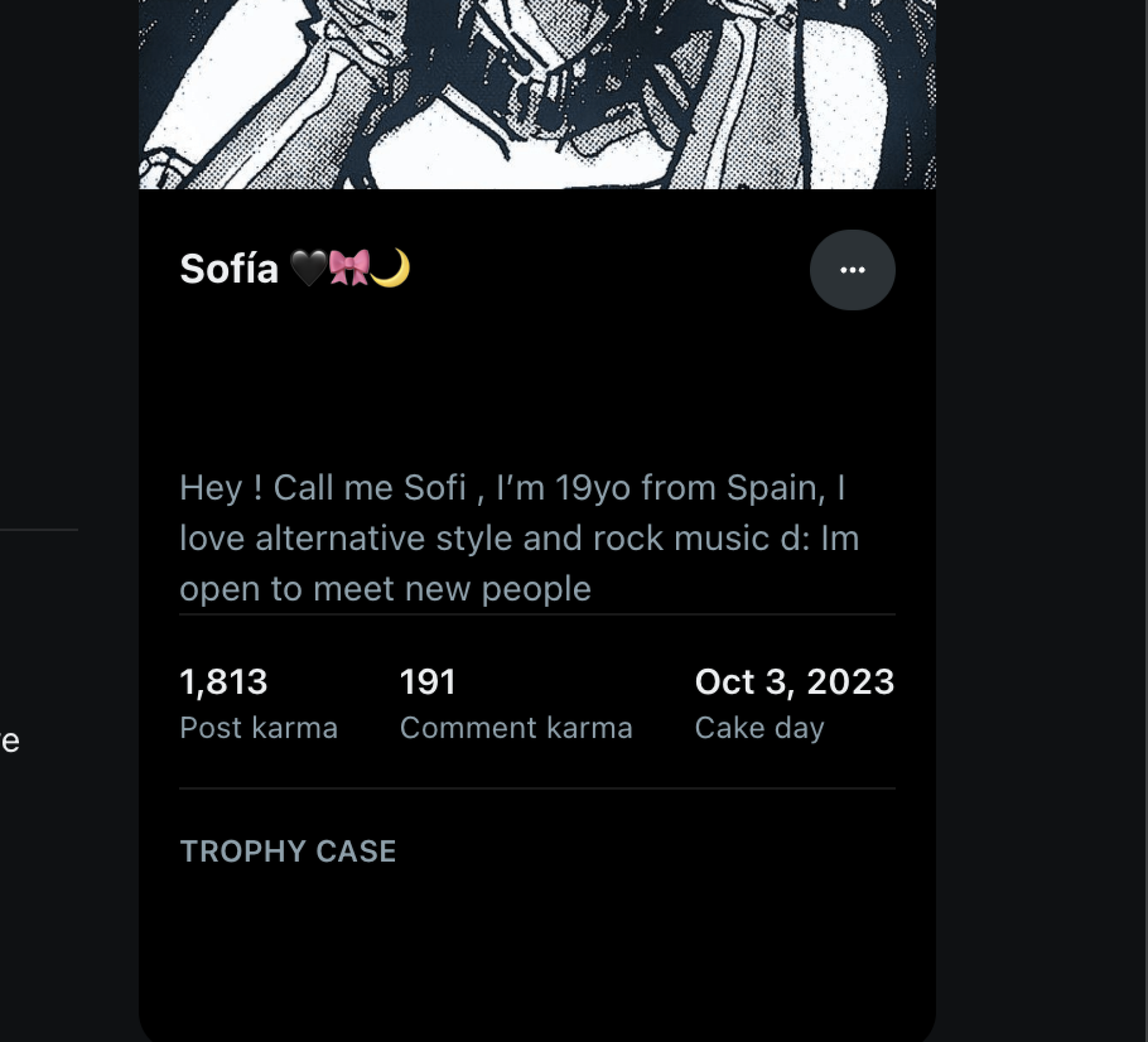

The Reddit profile, now deleted but partially resurrected via the Wayback Machine, presented “Sofía”: a self-proclaimed 19-year-old from Spain with an “alt style” and a love of rock music, who was “open to meeting new people.” Bunni is 25 and lives in the UK. She is not, and has never been, Sofía.

Credit: Screenshot from Wayback Machine

“I’m so used to my content being stolen,” Bunni told Mashable. “It kind of just happens. But this was like — such a completely different way of doing it that I’ve not had happen to me before. It was just, like, really weird.”

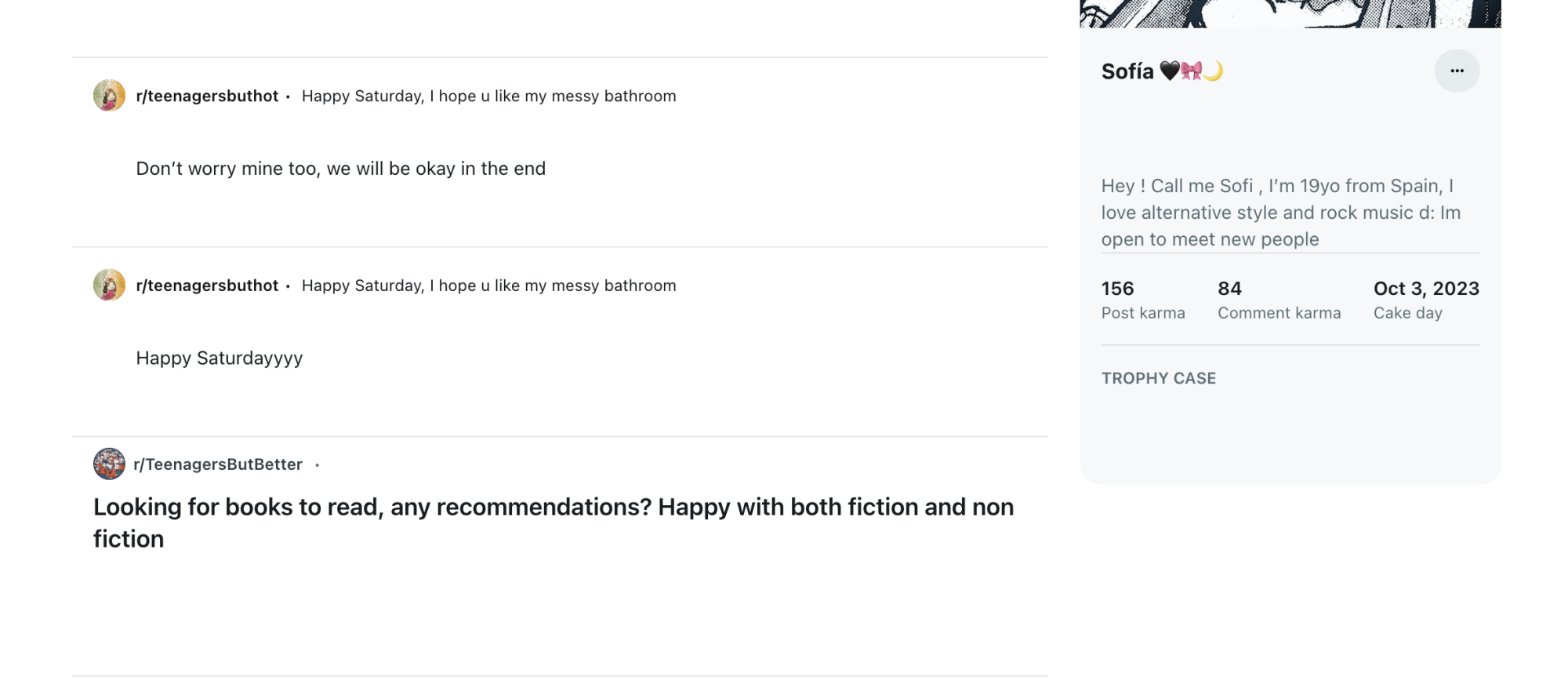

It gets weirder. The Sofía account, which first popped up in October 2023, started off innocently enough, posting to feel-good forums like r/Awww. But soon, it migrated to more niche — and more disconcerting — subreddits like r/teenagers, r/teenagersbutbetter, and r/teenagersbuthot. The latter two, offshoots of the main subreddit, exist in an irony-pilled gray zone with more than 200,000 combined members.

Credit: Screenshot from Wayback Machine

Using edited selfies lifted from Bunni’s socials, the account posted under the guise of seeking fashion advice and approval, and even shared photos of her pets.

“Do my outfits look weird?” one caption asked under a photo of Bunni trying on jeans in a fitting room.

“I bought those jeans,” Bunni recalled. “What do you mean?”

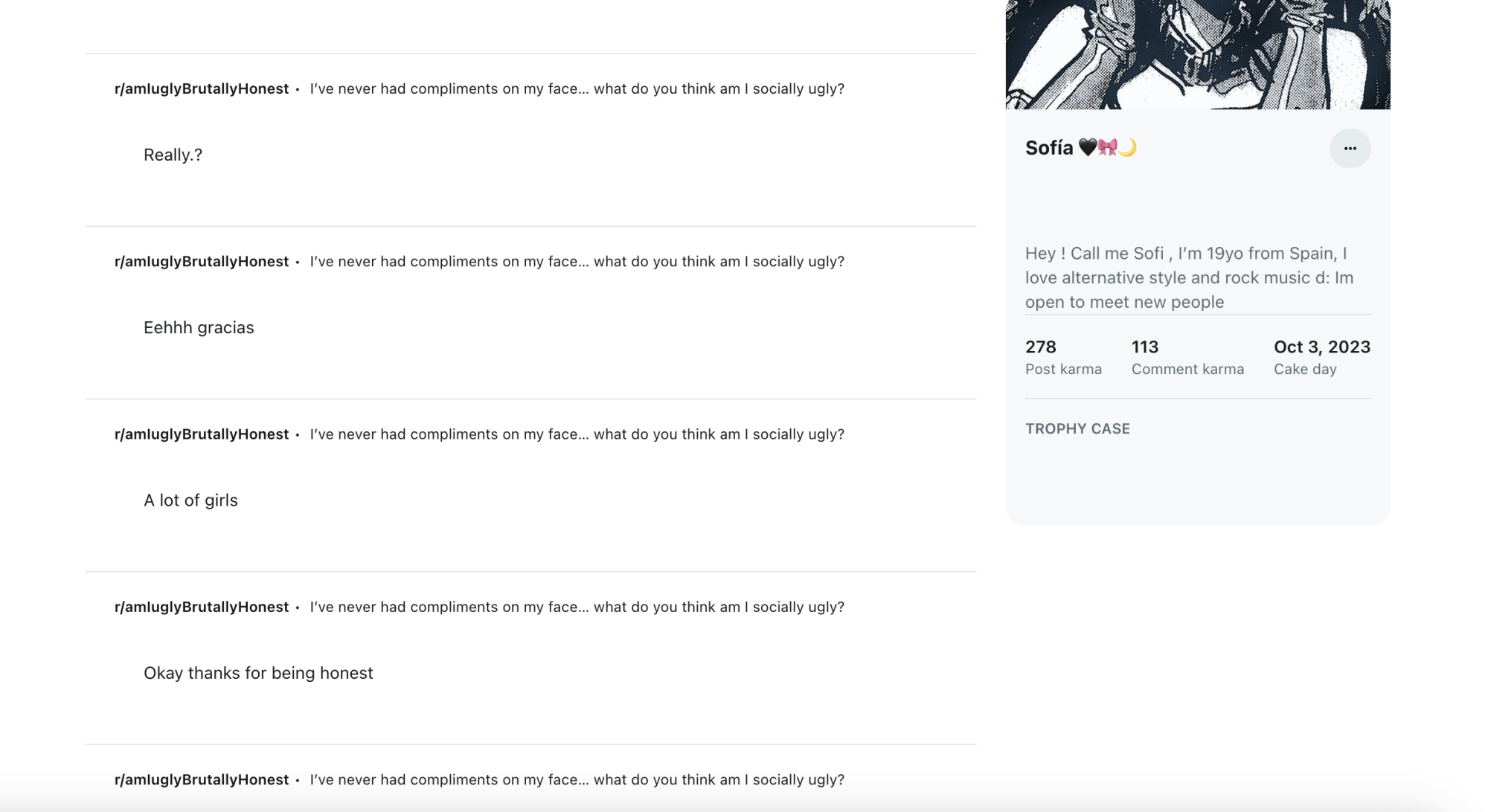

But the game wasn’t just about playing dress-up. The Sofía persona also posted in r/FaceRatings and r/amiuglyBrutallyHonest, subreddits where users rate strangers’ attractiveness with brutal candor. The motive was more than likely to build credibility and validation.

Credit: Screenshot from Wayback Machine

The final stage of the impersonation edged toward adult content. In the last archived snapshot of the account, “Sofía” had begun posting in subreddits like r/Selfie — a standard selfie forum where NSFW images are prohibited, but links to OnlyFans accounts in user profiles are allowed — and r/PunkGirls, a far more explicit space featuring a mix of amateur and professional alt-porn. One Sofía post in r/PunkGirls read: “[F19] finally posting sexy pics in Reddit, should I keep posting?” Another followed with: “[F19] As you all wanted to see me posting more.”

![Screenshot of a Reddit post in r/PunkGirls from user “Sofía 🖤🎀🌙” with the title “[F19] finally posting sexy pics in Reddit, should I keep posting?”](https://theonlyfanslife.com/wp-content/uploads/2025/05/images-4.fill_.size_2000x1109.v1747245191.png)

Credit: Screenshot from Wayback Machine

The last post from the account was in an r/AskReddit thread describing the weirdest sexual experience they’ve ever had.

Credit: Screenshot from Wayback Machine

Bunni surmised that the endgame was likely a scam targeting men, tricking them into buying nudes, potentially lifted from her own OnlyFans. The profile itself did not post links to outside platforms like Snapchat or OnlyFans, but she suspects the real activity happened in private messages.

“What I imagine they’ve done is they’ll be posting in SFW subreddits, using SFW pictures, and then messaging people that interact with them and being like, ‘Oh, do you want to buy my content’ — but it’s my content with the face replaced,” she said.

Fortunately for Bunni, after reaching out to moderators on r/teenagers, the impersonator’s account was removed for violating Reddit’s terms of service. But the incident raises a larger, murkier question: How often do incidents like this — involving digitally altered identities designed to evade detection — actually occur?

Popular-but-not-famous creators are the perfect targets

In typical cases of stolen content, imposters might repost images under Bunni’s name or, as catfishers do, under a fake name. But this version was more sophisticated. By altering her face (removing piercings, changing eye shape, subtly shifting features), the impersonator appeared to be taking steps to avoid being identified by followers, friends, or even reverse image searches. It wasn’t just identity theft. It was identity obfuscation.

Reddit’s Transparency Report from the second half of 2024 paints a partial picture. The platform removed 249,684 instances of non-consensual intimate media and just 87 cases flagged specifically as impersonation. But that data only reflects removals by Reddit’s central trust and safety team. It doesn’t include content removed by subreddit moderators, the unpaid volunteers who enforce their own community-specific rules. Mods from r/teenagers and r/amiugly, two of the subreddits where “Sofía” had been active, said they couldn’t recall a similar incident. Neither keeps formal records of takedowns or the reasons for removal.

Reddit declined to comment when Mashable reached out regarding this story.

If Trump signs the “Take It Down Act” into law, platforms will soon be required to remove nonconsensual intimate imagery within 48 hours.

It’s not hard to see why creators like Bunni would be the ideal target for an impersonator like this. As an OnlyFans creator with a multi-year presence on platforms like Instagram, TikTok, and Reddit, Bunni has amassed a vast archive of publicly available images — a goldmine for anyone looking to curate a fake persona with minimal effort. And because she exists in the mid-tier strata of OnlyFans creators — popular, but not internet-famous — the odds of a casual Reddit user recognizing her are low. For scammers, catfishers, and trolls, that sweet spot of visibility-without-virality makes her the perfect mark: familiar enough to seem real, obscure enough to stay undetected.

More troubling is the legal ambiguity surrounding this kind of impersonation. According to Julian Safarian, a California-based attorney who represents online content creators, likenesses are protected under U.S. copyright law, and potentially even more so under California’s evolving deepfake regulations.

“It gets complicated when a creator’s likeness is modified,” Safarian explained. “But if a reasonable person can still recognize the original individual, or if the underlying content is clearly identifiable as theirs, there may still be grounds for legal action.”

Because a Reddit user recognized the edited photos as Bunni’s, Safarian says she could potentially bring a case under California law, where Reddit is headquartered.

But Bunni says the cost of pursuing justice simply outweighs the benefits.

“I did get some comments like, ‘Oh, you should take legal action,’” she said. “But I don’t feel like it’s really worth it. The amount you pay for legal action is just ridiculous, and you probably wouldn’t really get anywhere anyway, to be honest.”

AI impersonation isn’t going away

While this may seem like an isolated incident — a lone troll with time, access to AI photo tools, and poor intentions — the growing accessibility of AI-powered editing tools suggests otherwise. A quick search for “AI face swap” yields a long list of drag-and-drop platforms capable of convincingly altering faces in seconds — no advanced skills required.

“I can’t imagine I’m the first, and I’m definitely not the last, because this whole AI thing is kind of blowing out of proportion,” Bunni said. “So I can’t imagine it’s going to slow down.”

Ironically, the fallout didn’t hurt her financially. If anything, Bunni said, the video she posted exposing the impersonation actually boosted her visibility. But that visibility came with its own cost — waves of victim-blaming and condescending commentary.

“It’s shitty guys that are just on Instagram that are like, ‘You put this stuff out there, this is what you get, it’s all your fault,’” she said. “A lot of people don’t understand that you own the rights to your own face.”

Have a story to share about a scam or security breach that impacted you? Tell us about it. Email [email protected] with the subject line “Safety Net” or use this form. Someone from Mashable will get in touch.
